A few weeks ago the New York Times wrote about a paper we had submitted to the British Journal of Sports Medicine (BJSM) calling for Bermon and Garnier (2017, BG17) to be retracted. You can get the back story at the links in the previous sentence, but two things to understand up front:

- BG17 is not just any old scientific paper -- it is the only scientific basis for regulations to be implemented by the International Association of Athletics Federations (IAAF) governing naturally occurring testosterone in female athletes.

- Calling for the retraction of a scientific paper is not something to be done lightly. BG17 is the first paper that I have publicly called to be retracted in 25+ years of publishing, reviewing, serving on editorial boards and studying science in policy. Yes, it is that bad.

Today the editor of BJSM emailed with the following information (quoted in full from his message):

1. The BJSM editorial team has considered the various points raised to us about retracting BG17 (including yours) and stand by our decision that retraction would be inappropriate.

2. We respect the authors’ decision not to open these data even though we support the general principle of data sharing.

No retraction, no sharing of data.

When should a paper be retracted? Fortunately, the publisher of BJSM has a policy on retraction which states:

Retractions are considered by journal editors in cases of evidence of unreliable data or findings, plagiarism, duplicate publication, and unethical research.

This retraction policy is similar to the recommendation of the Committee on Publication Ethics (COPE), whose guidelines are followed by most scientific publishers (PDF):

Retraction is a mechanism for correcting the literature and alerting readers to publications that contain such seriously flawed or erroneous data that their findings and conclusions cannot be relied upon.

Why have we called for BJSM to retract BG17? Because of seriously flawed and erroneous data such that the paper's conclusions cannot be relied on. This is such a clear case that it is baffling why BJSM has chosen not only to let the paper stand, but to not require the paper's flawed data to be shared openly.

Why is the case so clear?

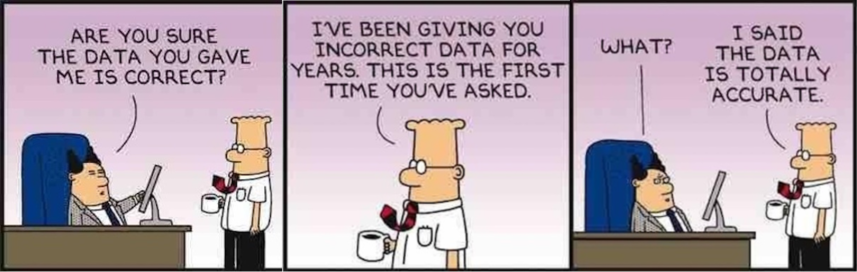

- The lead author of BG17 shared 25% of their data with us, and we documented pervasive errors in the data set. And it wasn't just a few errors: fully 17% to 33% of the data that we examined, by IAAF event, was bad.

- The lead author of BG17 admitted in an email to me and the editor of BJSM that their dataset contained errors.

- In a subsequent letter published in BJSM (Bermon et al. 2018, or BHKE18) the authors admit that 230 data points were dropped from BG17, or almost 18% of the total data. Even this admission was in error, as only 220 data points were dropped.

- The 220 dropped data points represent only a subset of the types of errors that we identified. Based on our analysis of 25% of the original data, there are no doubt many more in the other 75%.

An editorial board should be so lucky as to have such a clear-cut case. It's a no-brainer. The message to the authors of BG17 should be: Sorry guys, but this effort is so flawed that we are going to pull it. End of story.

So why did the BJSM editorial board act as they did? I have no insight on their internal deliberations, but given the retraction policy of the publisher of BJSM and the ethical guidelines suggested by COPE, there logically can be only three possibilities.

- The BJSM editorial board disagrees with our analysis and the statement of the lead author of BG17 that there are pervasive errors underlying the original analysis. This would be a very odd position to take, as it is contrary to both evidence and the admission of the researchers who wrote BG17 and BHKE18.

- The BJSM editorial board accepts that there are pervasive errors in BG17 and has decided to let the paper stand regardless. This too would be an odd position to take, as it is unethical and unscientific (according to COPE) and contrary to the retraction policy that BJSM is expected to follow. No scientific publisher worthy of the title would let flawed science stand.

- The BJSM editorial board is uncertain about the presence of pervasive errors in BG17 and in the face of this uncertainty has decided to let the paper stand. This would be an exceptionally odd position to take in light of the fact that BJSM has concluded (emphasis added), "We respect the authors’ decision not to open these data even though we support the general principle of data sharing." A really good way to understand the true depth of data errors would be for BJSM to require the authors of BG17 to release fully 100% of their data that has no privacy concerns.

So which is it?

The bottom line here is that BJSM has failed in its core scientific obligations. By all appearances BJSM is acting in the interests of IAAF and protecting IAAF research from normal scientific scrutiny. I have no idea why this is so, but it is a subject that I'll continue to pursue.

Ross Tucker, Erik Boye and I will be revising our submission to BJSM and will ask to have it reviewed, published and linked to BG17. Obviously there is more to come, so stay tuned.

(Note: This post represents my views only, though everyone is welcome to share them.)
