Here is a summary of the models from the FT:

- Emily Williams, a PhD candidate at London Business School, updated a model first developed by Andrew Bernard of the Tuck School of Business at Dartmouth for the 2000 games in Sydney. It focuses on population, income per capita, total GDP, and host-nation advantage to explain Olympic performance.

- Daniel Johnson, an economist at Colorado College, has used a similar model to publish predictions for the Olympics for several years. A change to his approach for the 2012 games adds a host-nation effect that pre-dates and post-dates the current games (so China and Brazil should benefit in addition to Great Britain), and eliminates an earlier focus on political structures and climate.

- José Ursúa and Kamakshya Trivedi of Goldman Sachs, as part of a recently published note on the economics of the Olympics, highlighted the strength of the host-nation advantage in certain sports and used the bank’s “Growth Environment Score”, a proprietary summary statistic used to score countries’ institutional structures, as a key independent variable.

- PWC’s briefing paper Modelling Olympic Performance found population, average income levels (measured by GDP per capita at PPP exchange rates), ex-Soviet status and host-nation effect to be statistically significant factors.

In the evaluation summarized in the graph at the top of this post I present results for two naïve baselines. One is simply the unadjusted results of the 2008 Olympic games (2008 Actual) and the second is a simple adjustment to the 2008 games (Fancy Naive) described below.

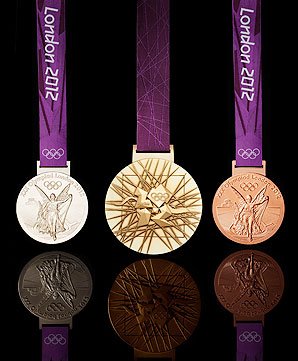

- Gold Medal – Consensus Forecast

- Silver Medal – Goldman Sachs

- Bronze Medal – PriceWaterhouseCoopers

- Did not place: Johnson and Williams

In this evaluation I looked only at the top 25 medal-winning countries, which for 2012 takes us down to Azerbaijan with 10 medals. It is possible that the rankings might shift if we looked all the way down the list. Further, not all predictions covered all countries, so of the top 25 medal winners, only 18 appeared across all predictions.

In addition, to explore the robustness of the evaluation, I looked at the top 25 medal winners in both 2008 and 2012. I also looked at skill with respect to the top 18 minus China and the UK, and with respect to China and the UK only. The reason for singling out these two countries is that, as hosts of the 2008 and 2012 Olympics, they experienced a big increase in medals won as the home nation. The results I found were largely robust to these various permutations.

Just for fun, I introduced a slightly more rigorous baseline for the naïve prediction (Fancy Naive), which assumed that the host country experiences a 15% boost in medals over 2008 and the 2008 host experiences a 15% decrease. All other elements of the naïve prediction remained the same. As you might expect, this slight variation created a much more rigorous baseline of skill, which has the result of coming in very, very close to (but not beating) the GS and PWC forecasts. The difference is slim enough to conclude that there is exceedingly little added value in either forecast. It would not be difficult to produce a slightly more sophisticated version of the naive forecast that would beat all of the individual models.
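For readers who want to see the Fancy Naive adjustment spelled out, here is a minimal sketch. The function name, the country labels and the medal counts are all made up for illustration (they are not the actual 2008 results), and rounding to whole medals is my assumption rather than something stated above.

```python
def fancy_naive(medals_prev, host_now, host_prev, boost=0.15):
    """Carry the previous games' medal counts forward, adjusting only the two hosts.

    medals_prev: dict of country -> medal count at the previous games
    host_now:    current host, assumed to gain `boost` (15%) over its previous count
    host_prev:   previous host, assumed to lose `boost` (15%) of its previous count
    """
    forecast = dict(medals_prev)  # every other country is left unchanged
    # Rounding to whole medals is an assumption made for this sketch.
    forecast[host_now] = round(medals_prev[host_now] * (1 + boost))
    forecast[host_prev] = round(medals_prev[host_prev] * (1 - boost))
    return forecast


# Hypothetical counts, NOT real Olympic data.
medals_2008 = {"Country A": 100, "Country B": 40, "Country C": 60}

# Country B plays the role of the 2012 host, Country A the 2008 host.
forecast_2012 = fancy_naive(medals_2008, host_now="Country B", host_prev="Country A")
print(forecast_2012)
```

With these placeholder numbers the previous host drops from 100 to 85, the current host rises from 40 to 46, and everyone else simply repeats their earlier count.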

However, the consensus forecast handily beat the more rigorous baseline. This deserves some additional consideration -- how is it that 4 models with no or marginal skill aggregate to produce a forecast with skill? My initial hypothesis is that the distribution of errors is highly skewed -- each method tends to badly miss a few country predictions. Such errors are reduced by the averaging across the four methods, resulting in a higher skill score. I would also speculate that tossing out the two academic models, having no skill, would lead to a further improvement in the skill score. Of course, the skill in the Consensus forecast might also just be luck. If I have some time I'll explore these various hypotheses.
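To make the averaging hypothesis concrete, here is a toy sketch. Every number in it is invented so that each hypothetical model badly misses one country, and mean absolute error stands in for the skill measure purely as an assumption for this example. It illustrates the mechanism only and says nothing about the real forecasts.

```python
from statistics import mean

# Invented "actual" medal counts and four invented forecasts.
# Each model is close on most countries but badly misses one.
actual = {"A": 50, "B": 30, "C": 20}
forecasts = {
    "model_1": {"A": 70, "B": 30, "C": 21},  # big miss on A (too high)
    "model_2": {"A": 49, "B": 12, "C": 20},  # big miss on B
    "model_3": {"A": 50, "B": 31, "C": 38},  # big miss on C
    "model_4": {"A": 30, "B": 29, "C": 19},  # big miss on A (too low)
}


def mean_abs_error(forecast, actual):
    """Average absolute medal-count error across countries."""
    return mean(abs(forecast[c] - actual[c]) for c in actual)


# Consensus forecast: the simple average of the four models, country by country.
consensus = {c: mean(f[c] for f in forecasts.values()) for c in actual}

individual_errors = {name: mean_abs_error(f, actual) for name, f in forecasts.items()}
consensus_error = mean_abs_error(consensus, actual)

for name, err in individual_errors.items():
    print(f"{name}: {err:.2f}")
print(f"consensus: {consensus_error:.2f}")
```

In this constructed case the consensus error is smaller than that of every individual model, because one model's large miss is diluted by the other three and misses in opposite directions partly cancel. Whether the real forecast errors actually behave this way is exactly what remains to be checked.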

The skillful performance of GS and PWC does have commentators speculating as to whether there may have been doping involved. That analysis awaits another day.

Here are some additional results:

- The models did worst on Russia, Japan, China and the UK

- The models did best on Brazil, Canada, Kenya and Italy

- Every model had several predictions right on target (error of 0 or 1)

- Every model had big errors for some countries

The verdict on skill is mixed. Individually, the models show very little. Together, some. Teasing out whether the ensemble demonstrates skill due to luck or design requires further work.
